The Institute for Trustworthy AI in Law & Society (TRAILS) is the first organization to integrate artificial intelligence participation, technology and governance during the design, development, deployment and oversight of AI systems. We investigate what trust in AI looks like, how to create technical AI solutions that build trust, and which policy models are effective in sustaining trust. In addition to UMD, GW and Morgan State, participants in TRAILS include Cornell University and private sector organizations such as the DataedX Group, Planet Word, Arthur AI, Checkstep, FinRegLab and Techstars.

Workforce Development: Equity Development for TRAILS Researchers

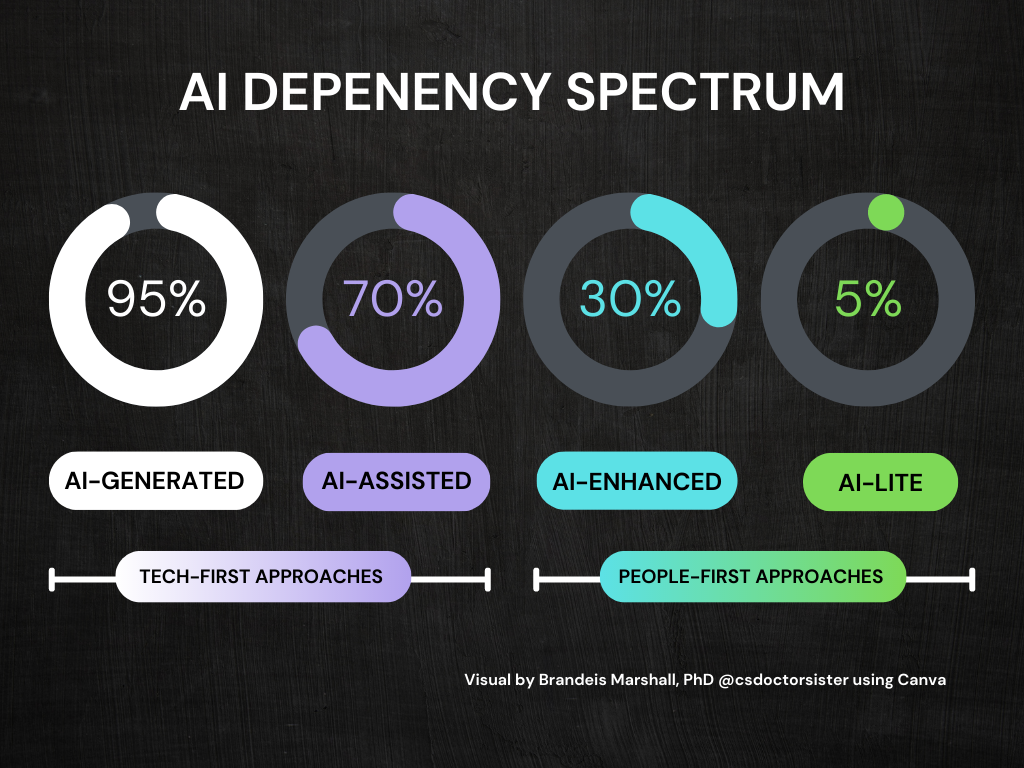

During a three-day annual workshop, the DataedX team helps TRAILS faculty and collaborators navigate current trends in AI-infused educational tools and platforms. AI-assisted tools and platforms, like ChatGPT, are disrupting how instructors assess how much a learner knows and can apply without advanced technological assistance. Whether concerns stem from trying to understand what these tools are capable of or from tackling the ethics of students cheating with generative AI assistance, educators and the education community at large (board members, administration, and parents) must understand the nuances of these tools.

Data Ethics Research: Scaffolding Community Engagement with Institute Use Cases

The fast-paced adoption of AI has left many workers uncertain of how to leverage or operationalize these technologies for their own advancement. Existing professional development approaches lean heavily on mentorship activities rather than also incorporating AI and data skills development. The DataedX team will investigate learning pathways to engage communities, particularly women and historically excluded communities, with the social, technical and sociotechnical trustworthiness of the Institute’s AI use cases. The DataedX Group will simultaneously examine each use case to map opportunities for communities to learn, apply, critique, and improve the systems under development.

Data Ethics Literacy Resources

The lack of infrastructure for AI learning and understanding results from public value failures, in particular the inequitable distribution of benefits and insufficient provider availability. Inaccessible avenues for disseminating instruction and a shortage of culturally competent instructors curb the participation, representation, and inclusion of certain communities. The DataedX team will help close the gap by modifying the Adoption-Intervention Continuum to be culturally responsive and by broadening understanding of its practical applications. Data ethics curriculum resources will be shared several times a year.